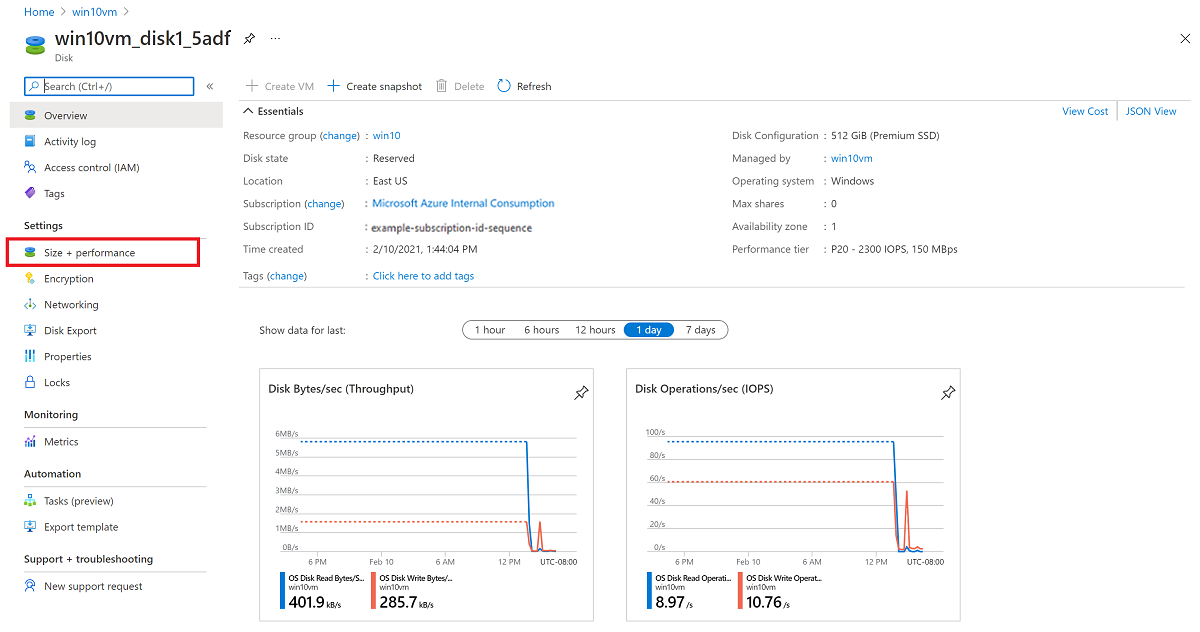

Settings -> Disks

Settings -> Properties -> Agent status: Ready or Not Ready

TTY

SysRq (Magic System Request key)

GRUB bootloader

Serial Console

sysctl -a | grep -i sysrq
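The command above prints `kernel.sysrq`. According to the kernel admin-guide sysrq page, 0 disables SysRq, 1 enables every function, and any other value is a bitmask. Below is a small Bash sketch that decodes the value into readable flags. The function name and the short labels are hypothetical, chosen for readability; they are not kernel identifiers.

```bash
#!/usr/bin/env bash
# Decode a kernel.sysrq value into the SysRq functions it enables.
# Bit values follow the kernel admin-guide sysrq page; the labels are
# hypothetical short names, not kernel identifiers.
sysrq_flags() {
  local value=$1 bit
  local -a out=()
  case $value in
    0) echo "disabled"; return ;;
    1) echo "all"; return ;;
  esac
  for bit in 2 4 8 16 32 64 128 256; do
    (( value & bit )) || continue
    case $bit in
      2) out+=(loglevel) ;;     # control of console logging level
      4) out+=(keyboard) ;;     # keyboard control (SAK, unraw)
      8) out+=(dumps) ;;        # debugging dumps of processes etc.
      16) out+=(sync) ;;        # sync command
      32) out+=(remount-ro) ;;  # remount read-only
      64) out+=(signal) ;;      # signalling of processes (term, kill, oom-kill)
      128) out+=(reboot) ;;     # reboot/poweroff
      256) out+=(nice-rt) ;;    # nicing of all RT tasks
    esac
  done
  echo "${out[*]}"
}
```

Usage on the VM: `sysrq_flags "$(sysctl -n kernel.sysrq)"`. For example, a value of 176 decodes to `sync remount-ro reboot`.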

https://www.youtube.com/watch?v=KevOc3d_SG4&t=147s

https://www.youtube.com/watch?v=HnvUxnNzbe4

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/serial-console-grub-proactive-configuration

https://www.kernel.org/doc/html/latest/admin-guide/sysrq.html

waagent

https://github.com/Azure/WALinuxAgent

The Microsoft Azure Linux Agent (waagent) manages Linux provisioning and VM interaction with the Azure Fabric Controller.

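What is the Azure Fabric Controller?

The Fabric Controller is Azure's internal orchestration layer. It manages the physical hosts in a datacenter and allocates, provisions, and monitors the VMs running on them. It is not the same thing as Azure Service Fabric, which is a distributed systems platform that makes it easy to package, deploy, and manage scalable and reliable microservices and containers.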

Operations ->

Monitoring ->

Automation ->

Support + troubleshooting -> Resource health

Support + troubleshooting -> Boot diagnostics

Support + troubleshooting -> Performance diagnostics

Support + troubleshooting -> Serial console

Connect -> Connect with Bastion Host

https://docs.microsoft.com/en-us/azure/bastion/tutorial-create-host-portal

https://www.rebeladmin.com/2019/11/step-step-guide-access-azure-vms-securely-using-azure-bastion/

Role

https://docs.microsoft.com/en-us/azure/role-based-access-control/check-access

Azure VM -> Support + troubleshooting -> Boot diagnostics

Boot Diagnostics

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/serial-console-grub-single-user-mode

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/boot-diagnostics

https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles#virtual-machine-contributor

GRUB bootloader issues

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/troubleshoot-vm-boot-error

https://gutsytechster.wordpress.com/2018/07/24/how-to-resolve-grub-error-file-grub-i386-pc-normal-mod-not-found/

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/serial-console-grub-proactive-configuration

https://www.youtube.com/watch?v=KevOc3d_SG4

https://askubuntu.com/questions/266429/error-file-grub-i386-pc-normal-mod-not-found

10.79.202.45

10.79.202.5

fda

<password redacted>

azureubuntu

azlinux

R....1......e2021

GRand Unified Bootloader (GRUB) is likely the first thing you see when you boot a virtual machine (VM). Because it's displayed before the operating system has started, GRUB isn't accessible via SSH. In GRUB, you can modify your boot configuration to boot into single-user mode, among other things.
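To use GRUB from the Azure serial console, GRUB must display a menu and send its output to the serial port. Below is a minimal sketch of `/etc/default/grub` settings, loosely following the proactive-configuration guide linked earlier. The timeout and console values are assumptions to adapt per distribution.

```bash
# /etc/default/grub (sketch; adapt per distribution)
# Show the menu for a few seconds instead of booting straight through.
GRUB_TIMEOUT_STYLE=menu
GRUB_TIMEOUT=10
# Draw GRUB's menu on both the local console and the first serial port.
GRUB_TERMINAL="serial console"
GRUB_SERIAL_COMMAND="serial --speed=115200 --unit=0 --word=8 --parity=no --stop=1"
# Send kernel messages to ttyS0 too. Keep any options already on this line;
# only the console= entries are the point here.
GRUB_CMDLINE_LINUX="console=tty1 console=ttyS0,115200n8"
```

After editing, regenerate `grub.cfg`. Use `sudo update-grub` on Ubuntu/Debian, or `sudo grub2-mkconfig -o /boot/grub2/grub.cfg` on RHEL/CentOS with BIOS boot. On Ubuntu cloud images, files under `/etc/default/grub.d/` may override these values.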

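REISUB (Magic SysRq safe-reboot sequence): R = switch the keyboard out of raw mode, E = SIGTERM all processes except init, I = SIGKILL all processes except init, S = sync mounted filesystems, U = remount filesystems read-only, B = reboot.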
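The kernel only honours REISUB as far as the `kernel.sysrq` mask allows. The sketch below reports, for a given mask, which of the six letters would be accepted when SysRq comes from the keyboard or serial console. The letter-to-bit mapping comes from the kernel sysrq documentation; the function name is hypothetical. Per that documentation, root writes to `/proc/sysrq-trigger` are not subject to the mask.

```bash
#!/usr/bin/env bash
# For each REISUB letter, report whether a kernel.sysrq mask permits it.
# 1 means "everything enabled"; otherwise the letter's bit must be set.
reisub_allowed() {
  local mask=$1 letter bit
  for letter in r e i s u b; do
    case $letter in
      r) bit=4 ;;     # unRaw: keyboard control
      e|i) bit=64 ;;  # tErminate / kIll: signalling of processes
      s) bit=16 ;;    # Sync
      u) bit=32 ;;    # Unmount (remount read-only)
      b) bit=128 ;;   # reBoot
    esac
    if (( mask == 1 || (mask & bit) )); then
      echo "$letter allowed"
    else
      echo "$letter blocked"
    fi
  done
}
```

Usage: `reisub_allowed "$(sysctl -n kernel.sysrq)"`. With a mask of 176, only S, U and B are allowed, so R, E and I would be ignored.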

# Start or restart the VM
az vm start -g CORA-AI -n FDA-VEA

az vm restart -g CORA-AI -n FDA-VEA

# --force restarts an unresponsive VM by redeploying it; --no-wait returns immediately
az vm restart -g CORA-AI -n FDA-VEA --force --no-wait

# Boot diagnostics: enable it, then fetch the log captured during boot
az vm boot-diagnostics enable -g CORA-AI -n FDA-VEA

az vm boot-diagnostics get-boot-log -g CORA-AI -n FDA-VEA

# Serial console (requires the serial-console CLI extension): open a session, or hard-reset the VM
az serial-console connect -g CORA-AI -n FDA-VEA

az serial-console send reset -g CORA-AI -n FDA-VEA
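Once boot diagnostics is enabled, the boot log is often the quickest way to see why a VM never reached SSH. Below is a hedged helper that scans that log for a few well-known failure strings. The function name and pattern list are illustrative assumptions, not an exhaustive catalogue. It also assumes `get-boot-log` prints the log as plain text.

```bash
#!/usr/bin/env bash
# Scan a boot log read from stdin for a few common boot-failure signatures.
# Patterns (fixed strings, matched case-insensitively):
#   normal.mod      GRUB could not load normal.mod
#   grub rescue>    GRUB fell back to its rescue shell
#   Kernel panic    the kernel died during boot
#   emergency mode  systemd emergency target (often a bad /etc/fstab entry)
scan_boot_log() {
  local -a args=(-e "normal.mod" -e "grub rescue>" -e "Kernel panic" -e "emergency mode")
  # -n: prefix line numbers, -i: ignore case, -F: fixed strings, not regexes
  grep -n -i -F "${args[@]}" || echo "no known failure signature found"
}
```

Usage: `az vm boot-diagnostics get-boot-log -g CORA-AI -n FDA-VEA | scan_boot_log`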

--------------------------------------------------------------------------

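# List the IDs of managed disks in CORA-AI that are not attached to any VM (managedBy is null)
ids=$(az disk list -g CORA-AI --query '[?managedBy==`null`].id' -o tsv)

# Review $ids first: deletion is permanent (left unquoted so each ID becomes a separate argument)
az disk delete --ids $ids --yes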
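Because `--yes` skips the confirmation prompt, a dry run first is cheap insurance. Below is a sketch of a hypothetical `delete_unattached_disks` helper. It reads disk IDs on stdin and only prints the delete commands unless `CONFIRM=yes` is set.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read managed-disk IDs (one per line) on stdin and
# delete them only when CONFIRM=yes; otherwise print what would run.
delete_unattached_disks() {
  local id
  while IFS= read -r id; do
    # Skip blank lines (e.g. when the query returns nothing)
    if [ -z "$id" ]; then
      continue
    fi
    if [ "${CONFIRM:-no}" = "yes" ]; then
      az disk delete --ids "$id" --yes
    else
      echo "would run: az disk delete --ids $id --yes"
    fi
  done
}
```

Usage: run `az disk list -g CORA-AI --query '[?managedBy==`null`].id' -o tsv | delete_unattached_disks` to review the commands. Then rerun the same pipeline ending in `| CONFIRM=yes delete_unattached_disks` to delete.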

--------------------------------------------------------------------------

# Check the serial console status (enabled/disabled) for the current subscription
subscriptionId=$(az account show --output=json | jq -r .id)

az resource show --ids "/subscriptions/$subscriptionId/providers/Microsoft.SerialConsole/consoleServices/default" --output=json --api-version="2018-05-01" | jq .properties

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

dmesg | grep -i scsi
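On a running VM, `dmesg` also shows which block device name each SCSI disk was attached as. This helps match Azure disks to `/dev/sdX` names. Below is a sketch that extracts the device names from the kernel's "Attached SCSI disk" lines. The message format is assumed from typical kernel output, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Print the sdX device name from each "... [sdX] Attached SCSI disk" line on stdin.
attached_scsi_disks() {
  sed -n 's/.*\[\(sd[a-z]*\)\] Attached SCSI disk.*/\1/p'
}
```

Usage: `sudo dmesg | attached_scsi_disks`. `sudo` may be needed when `kernel.dmesg_restrict` is set.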

https://docs.microsoft.com/en-us/azure/virtual-machines/boot-diagnostics

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Blob SAS URL (read-only, single blob, HTTPS only, valid 2021-08-10 09:09:09 to 17:09:09 UTC; signature redacted):

https://eaasblobstorage.blob.core.windows.net/default/SOP%20-%20Reimb%20(002).pdf?sp=r&st=2021-08-10T09:09:09Z&se=2021-08-10T17:09:09Z&spr=https&sv=2020-08-04&sr=b&sig=<redacted>

Storage account: eaasblobstorage

Blob endpoint: https://eaasblobstorage.blob.core.windows.net/

Account SAS token (Blob service, valid 2021-08-10 09:16:31 to 2022-08-10 09:16:00 UTC; signature redacted):

sv=2020-04-08&ss=b&srt=sco&st=2021-08-10T09%3A16%3A31Z&se=2022-08-10T09%3A16%3A00Z&sp=rwdxftlacup&sig=<redacted>

Connection string (Key or SAS):

SharedAccessSignature=sv=2020-04-08&ss=b&srt=sco&st=2021-08-10T09%3A16%3A31Z&se=2022-08-10T09%3A16%3A00Z&sp=rwdxftlacup&sig=<redacted>;BlobEndpoint=https://eaasblobstorage.blob.core.windows.net/;

Query string:

?sv=2020-04-08&ss=b&srt=sco&st=2021-08-10T09%3A16%3A31Z&se=2022-08-10T09%3A16%3A00Z&sp=rwdxftlacup&sig=<redacted>
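A SAS connection string is the blob endpoint and the SAS token joined as semicolon-separated key=value pairs. Since these are named keys, their order should not matter. Below is a sketch of a hypothetical helper that builds one and accepts a token copied with its leading "?".

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a Blob-service connection string from an
# endpoint URL and a SAS token (with or without its leading "?").
sas_connection_string() {
  local endpoint=$1 token=$2
  token=${token#\?}          # drop a leading "?" if the token was copied from a URL
  endpoint=${endpoint%/}/    # normalise to exactly one trailing slash
  printf 'BlobEndpoint=%s;SharedAccessSignature=%s\n' "$endpoint" "$token"
}
```

Usage: `sas_connection_string https://eaasblobstorage.blob.core.windows.net/ "$SAS_TOKEN"`, where `SAS_TOKEN` is a hypothetical variable holding the token.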

How will you connect to the storage account?

Connection string (Key or SAS)

Shared access signature URL (SAS)

Account name and key

Second account SAS (Blob service, HTTPS only, valid 2021-08-10 09:52:53 to 2022-08-10 17:52:53 UTC; signature redacted):

?sv=2020-08-04&ss=b&srt=sco&sp=rwdlactfx&se=2022-08-10T17:52:53Z&st=2021-08-10T09:52:53Z&spr=https&sig=<redacted>

Shared access signature URL (SAS):

https://eaasblobstorage.blob.core.windows.net/?sv=2020-08-04&ss=b&srt=sco&sp=rwdlactfx&se=2022-08-10T17:52:53Z&st=2021-08-10T09:52:53Z&spr=https&sig=<redacted>

------------------------

Connection string:

SharedAccessSignature=sv=2020-08-04&ss=b&srt=sco&sp=rwdlactfx&se=2022-08-10T17:52:53Z&st=2021-08-10T09:52:53Z&spr=https&sig=<redacted>;BlobEndpoint=https://eaasblobstorage.blob.core.windows.net/
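Fields such as `se` (expiry), `st` (start) and `sp` (permissions) can be read directly from a token, and both account tokens recorded above have an `se` in 2022. Below is a local-only sketch that extracts a field and checks expiry. The function names are hypothetical. Only `%3A` is URL-decoded, which is enough for the timestamps.

```bash
#!/usr/bin/env bash
# Print one query parameter (e.g. se, st, sp) from a SAS token or SAS URL.
sas_field() {
  local token=${1#*\?} name=$2 pair
  local -a pairs
  IFS='&' read -r -a pairs <<< "$token"
  for pair in "${pairs[@]}"; do
    if [ "${pair%%=*}" = "$name" ]; then
      printf '%s\n' "${pair#*=}" | sed 's/%3A/:/g'   # decode ":" in timestamps
      return 0
    fi
  done
  return 1   # parameter not present
}

# Turn an ISO 8601 UTC timestamp (or a date-only value) into YYYYMMDDhhmmss
# so two timestamps can be compared arithmetically.
ts_to_number() {
  local digits=${1//[!0-9]/}
  digits=${digits}000000
  printf '%s\n' "${digits:0:14}"
}

# Exit 0 if the token's expiry (se) is earlier than "now" (default: current UTC time).
sas_expired() {
  local se now
  se=$(sas_field "$1" se) || return 2   # no expiry field found
  now=${2:-$(date -u +%Y-%m-%dT%H:%M:%SZ)}
  (( $(ts_to_number "$se") < $(ts_to_number "$now") ))
}
```

Usage: `sas_field "$SAS_URL" sp` prints the permissions, and `sas_expired "$SAS_URL" && echo expired` checks validity. `SAS_URL` is a hypothetical variable holding the token or full URL.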

------------------------